A website isn't a filing cabinet, but somehow, yours has become a PDF repository. No one sets out to build a digital monument to the PDF. How did this happen? And what are you going to do about it?

Why PDFs are a problem

PDFs are a barrier between you and your users. They are text-heavy and dense and usually lack their host site's visual context and navigability. Don't serve your audience PDF content that could otherwise be a web page. Good old-fashioned HTML is more accessible, easier to update and maintain, and provides a better overall user experience.

- Accessibility. Many PDFs are not created to be accessible to assistive technology. This excludes parts of your audience and leaves your organization vulnerable to lawsuits.

- Forced downloads. Requiring folks to download a document to complete a task is an extra step. Most won't want to take it. Some users, especially on mobile devices, won't know where to find the download, ending their journey. Users with low bandwidth or limited data plans may not want to download large files.

- Search and findability. Content hidden in a PDF is also hidden from simple search. Using CTRL+F on an HTML page won't find content in a PDF attached to that page. Without internal navigation, PDFs are hard to navigate and scan for keywords.

- Obsolescence. Old PDFs lingering on your site can turn up in search results. They may provide information that's inaccurate, out of date, or worse.

- Screen compatibility. PDFs are formatted for a particular size, usually intended for print. Users on laptops, tablets, and mobile devices must pinch and zoom to navigate the content.

How to solve your PDF problem

Before you can solve a problem, you have to know its size. First, audit your PDFs. Decide which to tackle first, then convert them to HTML content, link to external sources, or modify PDFs to meet accessibility standards.

Audit

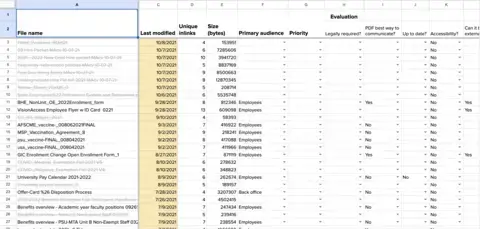

To get a sense of the scale of your PDF problem, perform a PDF audit. Collect a list of all the PDFs on your site and review them one by one. PDF content that meets the criteria below will likely work well as HTML content.

- Is the content all text and fewer than five pages?

- Is a PDF the best way to communicate this information to our audience?

- Is this PDF up to date? Will it need to be updated often?

- Can it be made into website content or a web form?

- Do we need to host this content, or can we link to it elsewhere?

- Can it be deleted?

Download our starter template to start evaluating your PDFs.

To find links to all the PDFs on your website, use web crawler software like Screaming Frog or compile them manually.
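If you compile the list yourself, a short script can pull the PDF links out of a page's HTML. The following is a minimal sketch in Python using only the standard library, not a replacement for a full crawler. The class name `PdfLinkParser`, the helper `find_pdf_links`, the sample markup, and the `example.org` URLs are all assumptions made up for illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class PdfLinkParser(HTMLParser):
    """Collects href values from <a> tags that point at .pdf files."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.pdf_links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if not href:
            return
        # Resolve relative links against the page URL.
        absolute = urljoin(self.base_url, href)
        # Check the path only, so query strings and fragments are ignored.
        if urlparse(absolute).path.lower().endswith(".pdf"):
            self.pdf_links.append(absolute)


def find_pdf_links(html, base_url):
    """Return the unique PDF links found in one page, in first-seen order."""
    parser = PdfLinkParser(base_url)
    parser.feed(html)
    return list(dict.fromkeys(parser.pdf_links))


# Hypothetical page markup, for illustration only.
sample_html = """
<ul>
  <li><a href="/docs/annual-report.PDF">Annual report</a></li>
  <li><a href="forms/leave.pdf?v=2">Leave form</a></li>
  <li><a href="/about">About us</a></li>
  <li><a href="/docs/annual-report.PDF">Annual report (again)</a></li>
</ul>
"""

links = find_pdf_links(sample_html, "https://example.org/hr/")
for link in links:
    print(link)
```

This only inspects one page at a time and only catches links whose path ends in `.pdf`. PDFs served from URLs without that extension would need a check of the response's content type instead.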

Any time you're tempted to create a new PDF, run through these questions again.

Prioritize

Once you understand the scope of the problem, it's time to set some priorities. Get content to people who need it faster by focusing on the most important stuff first. Plus, priorities help keep overwhelm at bay.

- Audience. Who is the content for? Tackle PDFs relevant to your primary audience first. Then, move on to content for secondary and tertiary audiences.

- Traffic. Use analytics tools to understand which of your PDFs gets the most traffic. Start there.

- Timeliness. Figure out when this content was last updated. Fresh, new content, or content that changes frequently, is a good candidate to tackle early on.

These are a starting point. Don't be afraid to set your own priorities.
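If your audit lives in a spreadsheet, one way to turn these three factors into a work order is a simple sort. The sketch below is in Python and assumes you have recorded an audience tier, a monthly view count, and a last-updated date for each PDF. The `PdfRecord` fields, the tie-breaking order, and the sample rows are assumptions for illustration, so adjust them to your own priorities.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class PdfRecord:
    url: str
    audience_tier: int   # 1 = primary, 2 = secondary, 3 = tertiary
    monthly_views: int   # taken from your analytics tool
    last_updated: date


def prioritize(records):
    """Sort by audience first, then traffic, then most recently updated."""
    return sorted(
        records,
        key=lambda r: (
            r.audience_tier,              # lower tier number comes first
            -r.monthly_views,             # higher traffic comes first
            -r.last_updated.toordinal(),  # fresher content comes first
        ),
    )


# Hypothetical audit rows, for illustration only.
records = [
    PdfRecord("/docs/board-minutes.pdf", 3, 900, date(2021, 5, 1)),
    PdfRecord("/docs/benefits-guide.pdf", 1, 400, date(2023, 1, 15)),
    PdfRecord("/docs/enrollment-form.pdf", 1, 1200, date(2019, 8, 30)),
    PdfRecord("/docs/vendor-terms.pdf", 2, 50, date(2024, 2, 1)),
]

ordered = [r.url for r in prioritize(records)]
for url in ordered:
    print(url)
```

Audience is treated here as the strongest signal, with traffic and timeliness as tie-breakers. If traffic matters more to your organization, reorder the tuple in the sort key.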

Convert

There's no way around it: converting PDF content to HTML is work.

You must restructure the PDF content as HTML, adding headers, lists, and links. You may need to rewrite the content entirely! The page structure, content hierarchy, and even the voice and tone may be different on a web page than they were in print. This change requires human input. Set aside enough time to meet your goals, and work off your prioritized list.

Link

Don't reproduce content locally that is available at other web sources. Link to reliable external web content. Link to documents issued by government agencies instead of hosting them or recreating them.

Mitigate

We recommend converting PDF content to HTML. If you can't, you must mitigate existing PDFs to meet accessibility standards.

Start with the source document and the program that created it. Tag the document with all the relevant metadata and structure the software needs to produce an accessible PDF.

Things get tough when the source document isn't available, or the software you used doesn't support making an accessible PDF. Adobe Acrobat's accessibility tools can fix issues flagged during a check. This can be complex and time-consuming. Any future updates to the document will need manual mitigation again. Are you sure this PDF can't be web content?

Fix your workflow

PDFs are a symptom, not the root cause.

An organization's people, processes, and habits create the conditions for a website chock full of PDFs. These internal processes may have nothing to do with the website, but their output often ends up online. Changing to a digital-first workflow takes time, training, and organizational will.

Here are some common scenarios we see and what to try instead.

Subject matter experts can't or won't publish content to the web

Sometimes, the person who writes the content can't or won't publish it to the web in HTML format. They don't have time, or they don't know how. Reasons vary. In these cases, someone in a web editorial role can work with subject matter experts (SMEs) to verify the accuracy of content, format it for the web, and get sign-off to publish. This person acts as a liaison between SMEs and the website to get content online in a format that people can access. At the same time, they support the ability of SMEs to create content. As content needs get more complex, it helps to differentiate between these roles.

In smaller organizations, educate SMEs to create good web content and publish it themselves.

Web editors aren't empowered to make changes to content

In some organizations, the people who publish content aren't the ones who created it—and they don't have the authority to make changes. If editors can't transform PDF content into something accessible and accurate, their only option is to upload the PDF as-is.

Reduce your dependence on PDFs by revising your workflow. Include the SME in review and sign-off, and empower the editor to make changes to suit the context.

Internal workflow reliant on PDFs

Human resources departments and other occupations that use a lot of forms may have internal workflows that rely on PDF input. Consider where in the process you can use web forms instead. If it's impossible to cut out all PDFs, create supporting documentation. How-to HTML pages help users understand the process without downloading a form first.

It takes time to change a print-reliant culture, so start small. Look for a few short but high-impact PDFs that you could convert to web forms first.

The CMS is hard to use

Some content management systems weren't created with the needs of authors in mind. Folks using these systems get frustrated or avoid the work. Uploading a PDF exported from Word seems like the fastest option for communicating important information. Minor adjustments to form fields and permissions could help, or a redesign may be in your future. (We know a guy.)

Formal or policy documents that are rarely or never updated

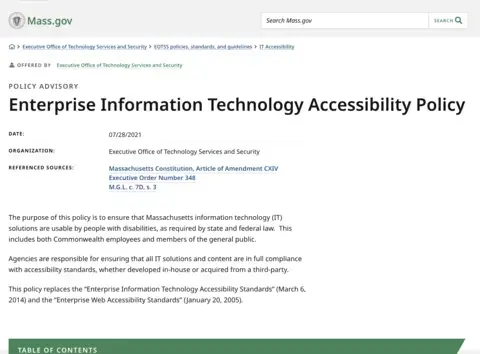

Use document structure, timestamps, and design to make a web page look permanent and authoritative. Visual design and structured content can help users understand the context they need to interpret the document.

Public policy, law, and code, which state governments often upload in PDF format, are good candidates for this treatment.

Work with your site builders to create a unique content type, including the fields and attributes needed to communicate necessary information: time and date, contact person, referenced sources, and a flexible content body area with options for semantic headers. A taxonomy vocabulary or tagging system to identify the originating organization and intended audiences can connect content across your site. (PDFs definitely can't do that.)

Manuals or other lengthy documents

Convert these to native web content. Web pages are easier to keep up-to-date as technology or processes change. If users need offline access, support PDF as an alternate format. A table of contents component makes it easier for users to browse long-form documents by skipping from header to header.

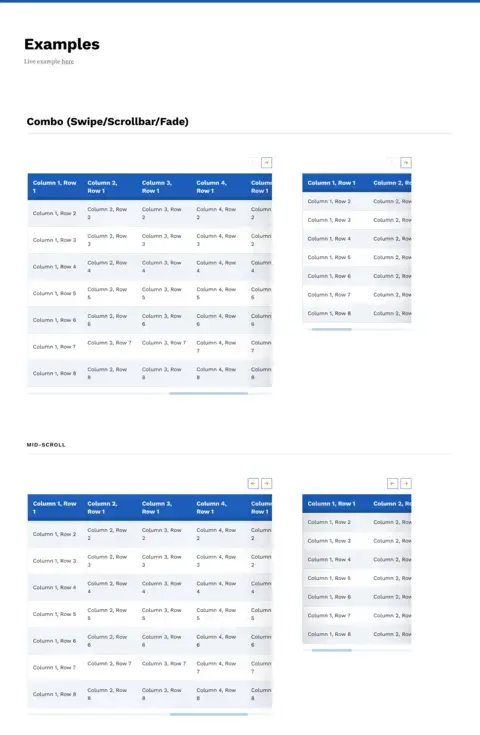

Content includes images, tables, branding, or other graphics

PDFs can look like brochures or include graphics or branding in ways that may not be possible on your website. Folks can be reluctant to let go of this design freedom.

Work with your web publishing team to translate the information you need to include into native web content. A design system with table styles, a CMS with the ability to embed data visualizations, and robust landing pages with graphic imagery are all ways to help avoid PDFs.

Your CMS might already have these capabilities, but no one knows about them, or there are no user guides. Time for a lunch-and-learn with your team or some system documentation.

Printable content

Modern web design practices make it easy to create web pages that look great when printed. If you still need a PDF version, attach it to the main HTML page as a secondary content source. Let your development team know if printing is a priority for your content.

When you have to use a PDF

Is there a place for PDFs on your website? Many content strategists would say, "Sure—in the trash." For that rare, required PDF, ensure people have the best possible experience.

- Create an accessible PDF from the start. Make sure your PDF text is machine-readable. Do not use image-only PDFs. Tag PDFs and add internal metadata.

- Add alt text for images, charts, graphs, and other visuals to describe the information displayed.

- Use visual hierarchy and plain language.

- If your document has fields for users to fill out, make these fields interactive.

- Don't force downloads. Allow PDFs to open in a user's browser.

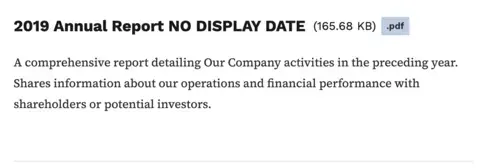

- When linking to a PDF, note the file type and include the file size. You may be able to set up your templates to output this information automatically.
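As a rough illustration of that last point, here is a small Python sketch of a helper a template might call to label PDF links with the file type and a readable size. The function names `format_file_size` and `pdf_link_html`, the rounding to one decimal place, and the sample values are assumptions, not features of any particular CMS.

```python
from html import escape


def format_file_size(num_bytes):
    """Turn a byte count into a short, human-readable size."""
    units = ["B", "KB", "MB", "GB"]
    size = float(num_bytes)
    for unit in units:
        # Stop once the value fits the unit, or we run out of units.
        if size < 1024 or unit == units[-1]:
            break
        size /= 1024
    if unit == "B":
        return f"{int(size)} B"
    return f"{size:.1f} {unit}"


def pdf_link_html(title, href, num_bytes):
    """Build a link whose text names the file type and size."""
    label = f"{escape(title)} (PDF, {format_file_size(num_bytes)})"
    return f'<a href="{escape(href, quote=True)}">{label}</a>'


# Hypothetical file details, for illustration only.
link = pdf_link_html("Annual report", "/docs/annual-report.pdf", 2_621_440)
print(link)
```

In a real template, the byte count would come from whatever file metadata your CMS stores for the upload.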

Persevere

It's hard to break a PDF habit without resources to support a proper web editorial workflow. Most folks are doing the best they can with the tools they have available.

Many organizations won't have the budget, time, or wherewithal to make the change away from PDFs. If you're at one of these places, don't fret. Audit your PDFs—even just the most popular ones—and start small. Your work can make the case for further investment.

And your users will thank you.

Other links and sources: